A wealth of experimental data supports the idea that synaptic transmission can be potentiated or depressed, depending on neuronal stimulation, and that this change at the synapse is a long-lasting effect responsible for learning and memory (the LTP/LTD paradigm). What is new is the assumption that synaptic and neural plasticity also require conditions that are not input-dependent (i.e. not dependent on synaptic stimulation, or even on neuromodulatory activation) but instead depend on the ‘internal state’ of the pre- or postsynaptic neuron.

Under such a model, stimulation leads to plasticity only if it meets a readiness on the part of the cell, the presynapse, or the postsynaptic site. We may call this conditional plasticity, to emphasize that conditions deriving from the internal state of the neural network must be met for plasticity to occur. A neural system with conditional plasticity will have different properties from current neural networks, which apply plasticity unconditionally in response to every stimulation. The known Hebbian neural network problem of ‘preservation of the found solution’ should become much easier to solve with conditional plasticity. Not every activation of a synapse leaves a trace: most instances of synaptic transmission have no effect on memory, and in many cases existing synaptic strengths remain unaltered by transmission (use of the synapse) unless certain conditions are met. Those conditions could be an unusually strong stimulation of a single neuron, a temporal sequence of (synaptic and neuromodulatory) stimulations that matches a conditional pattern, or a preconditioned high readiness for plastic change, e.g. through epigenetic mechanisms. However, it is an open question whether conditional plasticity in neural network models will help with conceptual abstraction and knowledge representation in general, beyond improving memory stability.
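To make the contrast between unconditional and conditional plasticity concrete, here is a minimal Python/NumPy sketch. It assumes a rate-based network whose weight matrix `w` has shape (postsynaptic, presynaptic). The names `hebbian_update`, `plasticity_gate`, and `conditional_hebbian_update`, and the two gating conditions used (readiness above a threshold, or unusually strong drive), are hypothetical stand-ins for the conditions listed above, not a proposed model.

```python
import numpy as np

def hebbian_update(w, pre, post, lr=0.1):
    """Unconditional Hebbian rule: every co-activation changes the weights.

    w has shape (n_post, n_pre); pre and post are activity vectors.
    """
    return w + lr * np.outer(post, pre)

def plasticity_gate(readiness, post, readiness_threshold=1.0, strong_drive=5.0):
    """Hypothetical gate: a neuron may change its incoming weights if its
    readiness exceeds a threshold or if it is unusually strongly driven."""
    return (readiness > readiness_threshold) | (post > strong_drive)

def conditional_hebbian_update(w, pre, post, readiness, lr=0.1, **gate_kwargs):
    """Conditional rule: the Hebbian term is applied only to the rows
    (postsynaptic neurons) for which the gate is open."""
    gate = plasticity_gate(readiness, post, **gate_kwargs).astype(float)
    return w + lr * np.outer(gate * post, pre)

# Two postsynaptic neurons, three presynaptic inputs, identical activity.
w0 = np.zeros((2, 3))
pre = np.array([1.0, 0.0, 1.0])
post = np.array([1.0, 1.0])
readiness = np.array([1.5, 0.2])  # only neuron 0 is "ready"

w_uncond = hebbian_update(w0, pre, post)                       # both rows change
w_cond = conditional_hebbian_update(w0, pre, post, readiness)  # only row 0 changes
```

With the same stimulation, the unconditional rule modifies both neurons' weights, while the conditional rule leaves the non-ready neuron untouched unless its drive exceeds `strong_drive`.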

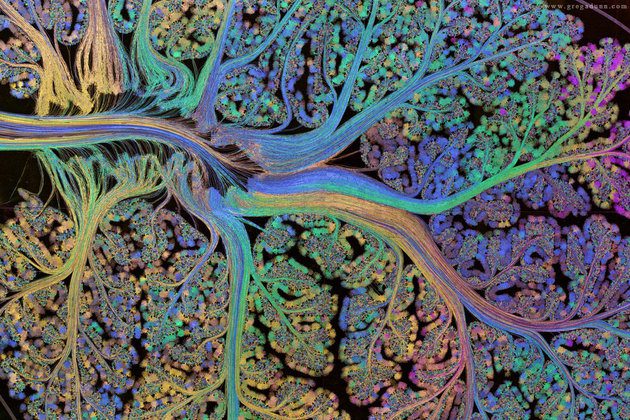

The ‘readiness’ of the cell for plastic changes is a catch-all term for a very complex internal processing scheme. It includes, for instance, the activation state of relevant kinases in protein signaling, such as CaMKII, PKA, PKC, and ERK, as well as the NF-κB family of transcription factors, CREB, BDNF, factors involved in glucocorticoid signaling, c-fos, etc. All of these can be captured by dynamical models (Refs), but such models become prohibitively complex, with hundreds of species involved and little opportunity for generalization. Even that is not sufficient: epigenetic effects such as histone modification also play a role in transcription, and modeling them dynamically would require a potentially large amount of additional data. However, it is known that non-specific drugs such as HDAC inhibitors, which enhance gene transcription by increasing histone acetylation, improve learning in general.

This underscores the idea that each neuron may be assigned a ‘readiness potential’ or threshold, a numeric value that summarizes the cell’s current ability to engage in plasticity events. Plasticity events originate at the membrane, triggered by strong calcium influx through NMDA receptors or L-type voltage-sensitive calcium channels (VSCCs). Observations have shown that pharmacological blockade of L-type VSCCs, as well as chelation of calcium in close proximity to the plasma membrane, inhibits immediate-early gene induction, i.e. the activation of cellular plasticity programs. If we model readiness as a threshold value, transcriptional plasticity is induced only when the strength of calcium input exceeds this threshold. If we model readiness as a continuous value, we may integrate values over time to arrive at a potentially more accurate model.
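The two readings of readiness can be sketched as follows, assuming a discretely sampled calcium signal. `threshold_induction` treats readiness as a fixed threshold on calcium input; `integrated_induction` treats it as a continuous, possibly time-varying value that scales calcium input inside a leaky integrator. Both function names, the leaky-integrator form, the Euler step, and the parameters `dt` and `tau` are assumptions chosen for illustration.

```python
import numpy as np

def threshold_induction(calcium, threshold):
    """Readiness as a threshold: mark each time step at which the calcium
    input exceeds the cell's plasticity threshold."""
    return np.asarray(calcium, dtype=float) > threshold

def integrated_induction(calcium, readiness, dt=1.0, tau=10.0):
    """Readiness as a continuous value (assumed leaky integrator): calcium
    input scaled by the current readiness is accumulated with decay time tau.
    Returns the integrated plasticity drive at every time step."""
    drive = 0.0
    trace = []
    for ca, r in zip(calcium, readiness):
        drive += dt * (-drive / tau + r * ca)  # Euler step of d(drive)/dt
        trace.append(drive)
    return np.array(trace)

calcium = [0.2, 0.2, 1.5, 0.2]
events = threshold_induction(calcium, threshold=1.0)  # only index 2 crosses
drive = integrated_induction(calcium, readiness=[0.5] * 4)
```

The threshold version reacts only to the single strong calcium pulse, whereas the integrated version also accumulates the weaker inputs and keeps a decaying memory of the pulse, which is one way a continuous readiness value could capture history.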

To simplify, we could start with a single scalar value of plasticity readiness, specific to each neuron but dynamically evolving, and explore the theoretical consequences of a neural network with internal state variables. We’d be free to explore various rules for the dynamic evolution of the internal readiness value.
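One way to start exploring such a network is sketched below in Python/NumPy. The class `ReadinessNetwork` and all of its update rules (relaxation of readiness toward a baseline, additive global neuromodulation, and a readiness cost after each plasticity event) are hypothetical choices, meant only as one example among the many possible rules for the evolution of readiness.

```python
import numpy as np

class ReadinessNetwork:
    """Toy feedforward rate network in which every output neuron carries a
    scalar plasticity readiness. All dynamics below are illustrative
    assumptions:
      * readiness relaxes toward a baseline with time constant tau_r,
      * a global neuromodulatory signal adds to every neuron's readiness,
      * a neuron whose readiness exceeds the threshold applies a Hebbian
        update to its incoming weights and then pays a readiness cost.
    """

    def __init__(self, n_in, n_out, baseline=0.5, threshold=1.0,
                 lr=0.05, tau_r=20.0, cost=0.5, seed=0):
        rng = np.random.default_rng(seed)
        self.w = rng.normal(0.0, 0.1, size=(n_out, n_in))
        self.readiness = np.full(n_out, baseline)
        self.baseline = baseline
        self.threshold = threshold
        self.lr = lr
        self.tau_r = tau_r
        self.cost = cost

    def step(self, x, modulation=0.0):
        post = np.tanh(self.w @ x)              # output rates
        gate = self.readiness > self.threshold  # which neurons are ready
        # Conditional Hebbian update: only rows of ready neurons change.
        self.w += self.lr * np.outer(gate * post, x)
        # Readiness dynamics: relaxation to baseline plus modulation ...
        self.readiness += (self.baseline - self.readiness) / self.tau_r + modulation
        # ... minus a cost for neurons that just underwent plasticity.
        self.readiness[gate] -= self.cost
        return post, gate

x = np.ones(4)
quiet = ReadinessNetwork(4, 3)
w_before = quiet.w.copy()
for _ in range(10):
    quiet.step(x)  # readiness stays at baseline, so weights never change

modulated = ReadinessNetwork(4, 3)
gates = [modulated.step(x, modulation=0.3)[1] for _ in range(10)]
```

Without modulation, readiness stays at its sub-threshold baseline and repeated stimulation leaves no trace. With sustained modulation, readiness climbs above threshold after a few steps, plasticity occurs, and the cost pulls readiness back down. Trying other readiness rules only requires changing the two lines that update `self.readiness`.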