I don’t think neural networks are still in their infancy; I think they are moribund. Some say they have hit a roadblock. AI used to be based on rules, symbols and logic. Then probability and statistics came along, and neural networks were created as an efficient statistical paradigm. Unfortunately, the old AI (GOFAI) was discontinued and replaced by statistics. Since then, ever more powerful computers and ever more data have come along, so statistics, neural networks (NN) and machine learning seemed to create value, even though they were based on simple concepts from the late eighties, early nineties or even earlier. Because success was easy, we failed to develop. Some argue for hybrid models combining GOFAI and NN. But that was tried early on, and it wasn’t very successful. What we need now is a new and deeper understanding of what the brain actually does, because it obviously does symbol manipulation, it does logic, it does math. Most importantly, we humans learned to speak using nothing better than a mammalian brain (with a few specializations). I believe there is a new paradigm out there which can fulfill these needs: language, robotics, cognition, knowledge creation. I call it the vertical-horizontal model: a model of neuron interaction in which the neuron is a complete microprocessor of a very special kind. This allows us to build a symbolic brain. There will be a trilogy of papers to describe this new paradigm, and a small company to build the necessary concepts. For now, here is a link to an early draft. It is hard to read and not yet a paper, more a collection right now, but it is ready for feedback at my email! I’ll soon post a summary here as well.


Cocaine dependency and restricted learning

Substantial work (NasifFJetal2005a, NasifFJetal2005b, DongYetal2005, HuXT2004, Marinellietal2006) has shown that repeated cocaine administration changes the intrinsic excitability of prefrontal cortical (PFC) neurons in rats by altering the expression of ion channels. It downregulates voltage-gated K+ channels and increases membrane excitability in principal (excitatory) PFC neurons.

An important consequence of this result is the following: by restricting expression levels of major ion channels, the capacity of the neuron to undergo intrinsic plasticity (IP) is limited, and therefore its learning or storage capacity is reduced.

Why is IP important?

It is often assumed that the “amount” of information that can be stored by the whole neuron is limited compared to that of its synapses, and that IP therefore cannot have a large role in neural computation. This view rests on a number of assumptions, namely (a) that IP is expressed only by a single parameter, such as a firing threshold or a bit value indicating internal calcium release; (b) that IP could be replaced by a “bias term” for each neuron, essentially another parameter on a par with its synaptic parameters and trainable along with them; (c) that, at most, this bias term is multiplicative rather than additive like the synaptic parameters, but still just one learnable parameter; and (d) that synapses are independently trainable on the basis of associative activation, without requiring the whole neuron to undergo plasticity. The biology of intrinsic excitability and plasticity is very complex, and many of its aspects could be relevant in a neural circuit (or tissue), so it is challenging to extract the plausible components that are most significant for IP. This is certainly a fruitful area for further study.

In our latest paper we mostly challenge (d), i.e. we advocate a model in which IP implies localist SP (synaptic plasticity), so that the occurrence of SP is tied to the occurrence of IP in a neuron. In this sense the whole neuron extends control of plasticity over its synapses; in this particular model, over its dendritic synapses. It is well known that some neurons, such as those in the dentate gyrus, exhibit primarily presynaptic plasticity, i.e. control over axonal synapses (mossy fiber contacts onto hippocampal CA3 neurons), but we have not addressed this question for cortex from the biological point of view. In any case, if this model captures an important generalization, then cocaine dependency leads to reduced IP and, as a consequence, reduced SP at a principal neuron’s site. If the neuron is reduced in its ability to learn, i.e. to adjust its voltage-gated K+ channels, so that it operates with heightened membrane excitability, then its dendritic synapses should also be restricted in their capacity to learn (for instance, to undergo LTD).

As a matter of fact, a more recent paper (Otisetal2018) shows that if we block intrinsic excitability during recall in a specific area of the PFC (prelimbic PFC), memories encoded in this area are actually prevented from becoming activated.
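The dependency argued for in this post (no SP without IP) can be illustrated with a toy sketch. Everything in it is an assumption made for illustration and is not taken from the paper: the class `ToyNeuron`, the scalar `gain` standing in for the state of the voltage-gated K+ channels, the clamping bounds `gain_min` and `gain_max`, and the update rule itself are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class ToyNeuron:
    weights: list      # dendritic synaptic weights
    gain: float        # intrinsic excitability (stand-in for K+ channel state)
    gain_min: float    # hypothetical bounds on how far IP can move the gain
    gain_max: float

    def step(self, inputs, gain_shift, lr):
        """Attempt one IP step; apply SP only if the IP step took effect."""
        new_gain = min(self.gain_max, max(self.gain_min, self.gain + gain_shift))
        ip_occurred = new_gain != self.gain
        self.gain = new_gain
        if ip_occurred:
            # Toy gating rule: synapses change only when the neuron changed.
            self.weights = [w + lr * x for w, x in zip(self.weights, inputs)]
        return ip_occurred

def train(neuron, steps=5):
    """Run identical LTD-like steps and count how many changed the synapses."""
    inputs = [1.0, 0.0, 1.0]
    return sum(neuron.step(inputs, gain_shift=-0.125, lr=-0.0625)
               for _ in range(steps))

# A neuron with a wide excitability range, and one pinned near high
# excitability with a narrow range (the assumed effect of cocaine).
unrestricted = ToyNeuron([0.5, 0.5, 0.5], gain=1.0, gain_min=0.25, gain_max=2.0)
restricted = ToyNeuron([0.5, 0.5, 0.5], gain=2.0, gain_min=1.75, gain_max=2.0)

unrestricted_updates = train(unrestricted)
restricted_updates = train(restricted)
print(unrestricted_updates, unrestricted.weights)  # 5 [0.1875, 0.5, 0.1875]
print(restricted_updates, restricted.weights)      # 2 [0.375, 0.5, 0.375]
```

In this sketch the restricted neuron exhausts its narrow excitability range after two steps, and from then on its synapses stop depressing even though the input is unchanged.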

Heavy-tailed distributions and hierarchical cell assemblies

In earlier work, we meticulously documented the distribution of synaptic weights and the gain (or activation function) in many different brain areas. We found a remarkable consistency of heavy-tailed, specifically lognormal, distributions for firing rates, synaptic weights and gains (Scheler2017).
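To make “heavy-tailed” concrete, here is a minimal sketch that compares how much of the total synaptic weight is carried by the strongest 10% of synapses under a lognormal and under a normal distribution. The parameters (`mu = 0`, `sigma = 1` for the lognormal; mean 1.0 and standard deviation 0.3 for the normal) and the helper `top_share` are hypothetical choices for illustration, not values fitted to the data in Scheler2017.

```python
import random

def top_share(values, fraction=0.1):
    """Fraction of the total sum carried by the largest `fraction` of values."""
    ranked = sorted(values, reverse=True)
    k = max(1, int(len(ranked) * fraction))
    return sum(ranked[:k]) / sum(ranked)

rng = random.Random(0)   # fixed seed so runs are repeatable
n = 10_000

# Hypothetical parameters, chosen for illustration only (not fitted to data).
lognormal_weights = [rng.lognormvariate(0.0, 1.0) for _ in range(n)]
# Comparison: normally distributed weights, clipped at zero.
normal_weights = [max(0.0, rng.gauss(1.0, 0.3)) for _ in range(n)]

lognormal_share = top_share(lognormal_weights)
normal_share = top_share(normal_weights)
print(f"top 10% of lognormal weights carry {lognormal_share:.0%} of the total")
print(f"top 10% of normal weights carry    {normal_share:.0%} of the total")
```

With these assumed parameters, the strongest lognormal synapses carry a considerably larger share of the total weight than the strongest synapses of the normal comparison, which is the sense in which a few strong connections can dominate.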

Why are biological neural networks heavy-tailed (lognormal)?

Cell assemblies: Lognormal networks support models of hierarchically organized cell assemblies (ensembles). Individual neurons can activate or suppress a whole cell assembly if they are the strongest neuron or directly connect to the strongest neurons (TeramaeJetal2012).

Storage: Sparse strong synapses store stable information and provide a backbone of information processing. More frequent weak synapses are more flexible and add changeable detail to the backbone. Heavy-tailed distributions allow a hierarchy of stability and importance.

Time delay: The time delay of activation is reduced because strong synapses quickly activate a whole assembly (IyerRetal2013). This reduces the initial response time, which depends on the synaptic and intrinsic distribution. Heavy-tailed distributions activate fastest.

Noise response: Under additional input, whether noise or patterned, the pattern stability of the existing ensemble is higher (IyerRetal2013, see also KirstCetal2016). This is a side effect of the integration of all computations within a cell assembly.

Why hierarchical computations in a neural network?

Calculations which depend on interactions between many discrete points (N-body problems, Barnes and Hut 1986), such as particle-particle methods where every point depends on all others, lead to an O(N^2) calculation. If we replace this with hierarchical methods that combine information from multiple points, we can reduce the computational complexity to O(N log N) or O(N).

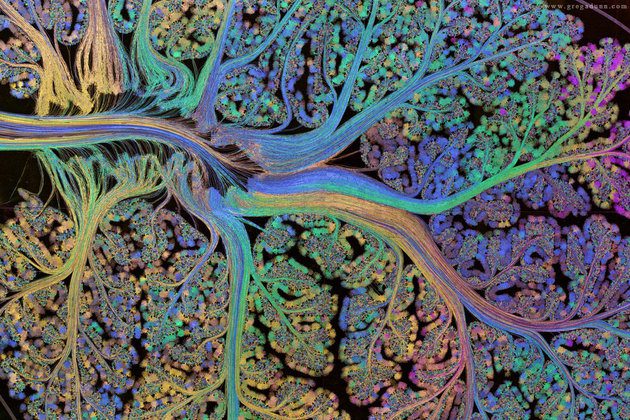
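The saving from hierarchical methods can be sketched with a one-dimensional toy in the spirit of the Barnes and Hut tree method. It is not a full implementation. The 1/distance kernel, the opening parameter `theta = 0.5`, the random positions and masses, and all names (`Node`, `direct`, `hierarchical`) are assumptions made for illustration. Distant groups of points are replaced by a single term at their center of mass, and the sketch counts how many interaction terms each approach evaluates.

```python
import random

class Node:
    """A segment of the line, summarised by its total mass and center of mass."""
    def __init__(self, points):
        self.lo = min(x for x, _ in points)
        self.hi = max(x for x, _ in points)
        self.mass = sum(m for _, m in points)
        self.children = []
        if self.hi == self.lo:
            self.center = self.lo          # leaf: use the exact position
            return
        self.center = sum(x * m for x, m in points) / self.mass
        mid = (self.lo + self.hi) / 2
        if mid >= self.hi:                 # guard for adjacent floats
            mid = self.lo
        self.children = [Node([p for p in points if p[0] <= mid]),
                         Node([p for p in points if p[0] > mid])]

def direct(points, x):
    """Exact sum over all other points: O(N) per target, O(N^2) in total."""
    return sum(m / abs(x - xj) for xj, m in points if xj != x)

def hierarchical(node, x, theta, counter):
    """Approximate the same sum, replacing distant segments by one term."""
    dist = abs(x - node.center)
    if not node.children and dist == 0.0:
        return 0.0                         # the target itself: no self-term
    if not node.children or node.hi - node.lo < theta * dist:
        counter[0] += 1                    # one term for the whole segment
        return node.mass / dist
    return sum(hierarchical(c, x, theta, counter) for c in node.children)

rng = random.Random(1)
n = 1000
points = [(rng.random(), rng.uniform(0.5, 1.5)) for _ in range(n)]
root = Node(points)

counter = [0]                              # terms evaluated by the tree walk
max_rel_err = 0.0
for x, _ in points:
    exact = direct(points, x)
    approx = hierarchical(root, x, 0.5, counter)
    max_rel_err = max(max_rel_err, abs(approx - exact) / exact)

direct_evals = n * (n - 1)
tree_evals = counter[0]
print(f"direct terms: {direct_evals}, hierarchical terms: {tree_evals}")
print(f"largest relative error: {max_rel_err:.3f}")
```

The parameter `theta` trades accuracy for speed: smaller values open more segments and approach the exact sum, while larger values summarise more aggressively.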

Since biological neural networks are not feedforward but connect in both forward and backward directions, they have a different structure from ANNs (artificial neural networks): they consist of hierarchically organised ensembles with a few ‘hub’ neurons of wide-range excitability and many ‘leaf’ neurons with low connectivity and small-range excitability. Patterns are stored in these ensembles and are accessed by a fit to an incoming pattern, which could be expressed by low mutual information as a measure of similarity. Patterns are modified by similar access patterns, but typically only in their weak connections (otherwise the accessing pattern would not fit).