Epigenetic modification is a powerful mechanism for the induction, expression, and persistence of long-term memory.

Long-term memory involves diverse cellular processes. These occur in neurons from different brain regions (in particular the hippocampus, cortex, and amygdala) during memory consolidation and recall. Examples include long-term changes in kinase expression in the proteome; changes in receptor subunit composition and localization at synaptic and dendritic membranes; epigenetic modifications of chromatin in the nucleus, such as DNA methylation and histone methylation; and posttranslational modifications of histones, including phosphorylation and acetylation. Histone acetylation is of particular interest because a number of known medications function as histone deacetylase (HDAC) inhibitors, i.e. they have the potential to increase DNA transcription and memory (more on this in a later post).

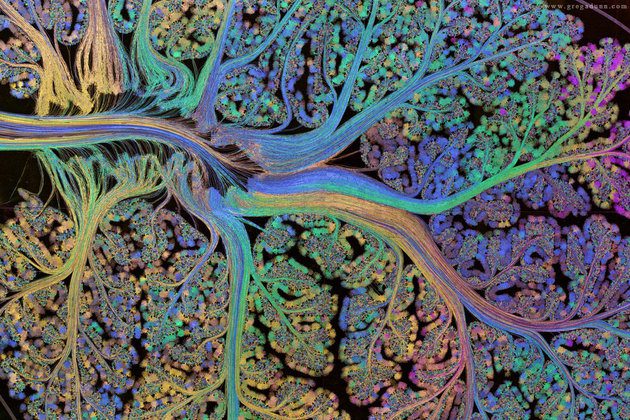

Epigenetic changes are important because they define the internal conditions for plasticity in the individual neuron. They underlie, for instance, kinase- or phosphatase-mediated (de)activation of enzymatic proteins and therefore influence the probability that membrane proteins are altered by synaptic activation.

Among epigenetic changes, DNA methylation, which in vertebrates occurs at cytosines, typically in CpG sites or CpG islands, acts to alter, and often to repress, DNA transcription. The methylation state is regulated by enzymes such as Tet3, which catalyses an important step in the demethylation of DNA. In the dentate gyrus of live rats, the expression of Tet3 was shown to increase greatly following LTP (long-term potentiation, i.e. synaptically induced memorization), suggesting that certain DNA stretches were demethylated [5] and presumably activated. During induction of LTP by high-frequency electrical stimulation, DNA methylation changes specifically for certain genes known for their role in neural plasticity [1]. The expression of neural plasticity genes is widely correlated with the methylation status of the corresponding DNA.

So there is interesting evidence that the induction of plasticity is filtered by the epigenetic landscape and by modifiers of gene expression, such as HDACs. Substances that act as histone deacetylase (HDAC) inhibitors increase histone acetylation. An interesting result from research on fear suggests that HDAC inhibitors increase some DNA transcription and specifically enhance fear extinction memories [2], [3], [4].