Memory has a physical presence in the brain, but there are no elements which permanently code for it.

Memory is located – among other places – in dendritic spines. The number of spines increases during learning, and spines carry stimulus- or task-specific information. Ablation of spines destroys this information (Hayashi-Takagi A2015). Astrocytes have filopodia which are also extended and retracted and which make contact with neuronal synapses. The presence of memory in the spine fits a neuron-centric view: spine protrusion and retraction are guided by cellular programs. A strict causality, such that x synaptic inputs cause a new spine, need not hold. Instead, spine formation and dissolution could follow highly conditional principles in which the internal state of the neuron and the neuron’s history matter. The rules for spine formation need not be identical to the rules for synapse formation and weight updating (which depend on at least two neurons making contact).

A spine needs to be there for a synapse to exist (in spiny neurons), but once it is there, clearly not all synapses are alike. They differ in the amount of AMPA receptors present and integrated, and in other receptors and ion channels as well. For instance, SK channels serve to block off a synapse from further change, and may be regarded as a form of overwrite protection. Therefore, the existence or lack of a spine is the first-order adaptation in a spiny neuron; the second-order adaptation involves the synapses themselves.

However, spines are also subject to high variability, turning over on the order of several hours to a few days. Some elements may have very long persistence, months in the mouse, but they are few. MongilloGetal2017 point out the fragility of the synapse and the dendritic spine in pyramidal neurons and ask what this means for the physical basis of memory. Given what we know about neural networks, is it necessary for the same spines to remain in order for memory to be permanent? Learning can operate with many random elements, but memory has prima facie no need for volatility.

It is most likely that memory is a secondary, ‘emergent’ property of volatile and highly adaptive structures. From this perspective it is sufficient to keep the information alive among the information-carrying units, which will recreate it in some form.

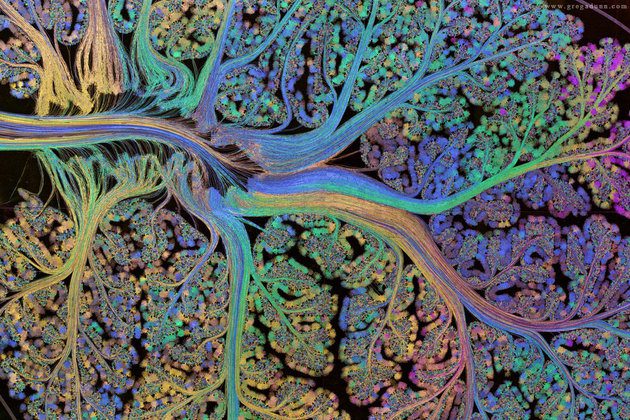

The argument is that the information is redundantly coded. If part of the code is missing, the rest still carries enough information to inform the system, which recruits new parts to carry it. The information is never lost, because not all synapses, spines and neurons are degraded at the same time, and because internal reentrant processing keeps the information alive and recreates new redundant parts while other parts are lost. It is a dynamic cycling of information.

There are difficulties if synapses are supposed to carry the whole information. The main one is this: if all patterns at all times are stored in synaptic values, without undue interference, and with all the complex processes of memory, forgetting, retrieval, reconsolidation etc., can this be reconciled with a situation where the response to a simple visual stimulus already engages 30-40% of the cortical area in processing? I have no quantitative model for this. I think the model only works if we use all the multiple, redundant forms of plasticity that the neuron possesses: internal states, intrinsic properties, synaptic and morphological properties, axonal growth, presynaptic plasticity.
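The redundancy argument can be illustrated, though not quantified, with a toy simulation. The sketch below is an assumption-laden cartoon and not a model of cortex: a bit pattern is held in many volatile "units" (stand-ins for spines or synapses), each unit is lost at random in every time step, and an optional "reentrant" step reads out the consensus of the survivors and writes it, with some copying noise, into newly recruited units. The names `simulate` and `majority` and all parameter values (`turnover`, `copy_noise`, the number of units and steps) are hypothetical choices made for illustration only.

```python
import random


def majority(copies):
    """Bitwise majority vote over a non-empty list of equal-length bit lists."""
    n = len(copies)
    return [1 if 2 * sum(bits) > n else 0 for bits in zip(*copies)]


def simulate(recreate, n_units=50, n_bits=32, turnover=0.2,
             copy_noise=0.02, steps=200, seed=0):
    """Return the fraction of pattern bits still readable after `steps`.

    All parameter values are illustrative assumptions, not measurements.
    """
    rng = random.Random(seed)
    pattern = [rng.randint(0, 1) for _ in range(n_bits)]
    # Every unit starts out holding a full copy of the pattern.
    units = [list(pattern) for _ in range(n_units)]

    for _ in range(steps):
        # Turnover: each unit is lost independently with probability `turnover`.
        units = [u for u in units if rng.random() >= turnover]
        if not units:
            break  # all carriers vanished in the same step; nothing is left
        if recreate:
            # Reentrant step: read the consensus of the survivors and write it,
            # imperfectly, into newly recruited units.
            consensus = majority(units)
            while len(units) < n_units:
                units.append([b ^ (rng.random() < copy_noise) for b in consensus])

    if not units:
        return 0.0
    readout = majority(units)
    return sum(r == p for r, p in zip(readout, pattern)) / n_bits


if __name__ == "__main__":
    print("with re-creation:   ", simulate(recreate=True))
    print("without re-creation:", simulate(recreate=False))
```

With these settings no individual unit is likely to survive the whole run, yet the pattern stays readable as long as the survivors keep seeding replacements. Without the re-creation step the same turnover simply erases it. The sketch covers only the cycling of redundant copies; it says nothing about interference between many stored patterns, which is the harder problem raised above.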